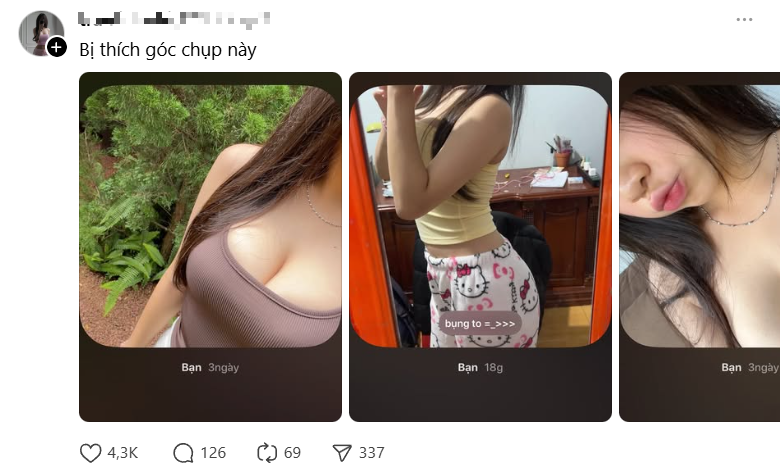

The launch of Threads has opened up a fresh, fast-moving communication space. Alongside the platform's explosive growth, however, an old phenomenon has resurfaced with astonishing reach: fake accounts that make maximum use of sexy, alluring images of attractive young women, “scraped” from elsewhere, and effortlessly attract massive engagement. The trend raises questions about the state of content moderation technology.

The Appeal of Fake Content

Why can an account built from posts that are not its owner's own, with no clear source, outperform “real” accounts that people have seriously invested in?

In the early stages of a new platform like Threads, the algorithm tends to prioritize virality over authenticity. Any post that receives a large number of comments and shares in a short time is treated by the algorithm as “high-quality content” and promoted in the feed.

High engagement → algorithmic boost → wider reach → even more engagement. This self-reinforcing loop lets fake content, however empty, dominate the feed.

These accounts use attractive images that evoke emotions such as curiosity, desire, and admiration. Such emotions are the perfect “push” to make users stop, look, and interact. Every second a user spends admiring an image is a win for the account behind it.

For male audiences in particular, interacting with “beautiful girl” images can create a sense of belonging to a community or a way to show off their “aesthetic taste.” In doing so, they unwittingly become free distribution channels for the content, even if they have no real interest in any message behind the image. Fake accounts do not simply post images; they use “beautiful girl” images as visual “bait” to conceal a sophisticated yet hollow content strategy.

Serious Consequences

The rise of fake accounts can have serious consequences for user experience and for the social media environment as a whole. First and foremost, quality content that creators have invested time and effort in can easily be overshadowed by cheap “engagement bait,” discouraging genuine creators.

When the deception comes to light, users' trust in the authenticity of social media declines, fostering a suspicious and toxic environment.

Worse still, fake accounts with massive engagement are ideal tools for exploitation and fraud. Once they have built a large audience, their owners can start selling advertising to disreputable brands on the strength of these inflated numbers. More dangerously, the images they use are stolen from real people, which can seriously damage those people's reputations and personal lives.

Resisting Visual Traps

To ensure that Threads and other new social platforms do not become breeding grounds for fake content, cooperation is needed from both the platform and the user community.

The platform needs to adjust its algorithm so that it weighs not only engagement but also verification of where content comes from. Users, for their part, should make full use of the platform's verification features to distinguish legitimate accounts, and should actively report fake accounts, impersonators…

The biggest change, however, must come from how users consume content. Before liking or commenting, users should pause and ask themselves: Is this account trustworthy? Does this story make sense? Where is this image from? They should limit their interactions with posts that look sensational and lack authentic information, and in doing so “starve” the algorithm of fake content. If users stop interacting, the algorithm will stop recommending such posts, and the fakes will have no room to thrive.

Image: Compilation